CC BY 4.0 (unless otherwise stated, or for reposted articles)


LLaMA from scratch

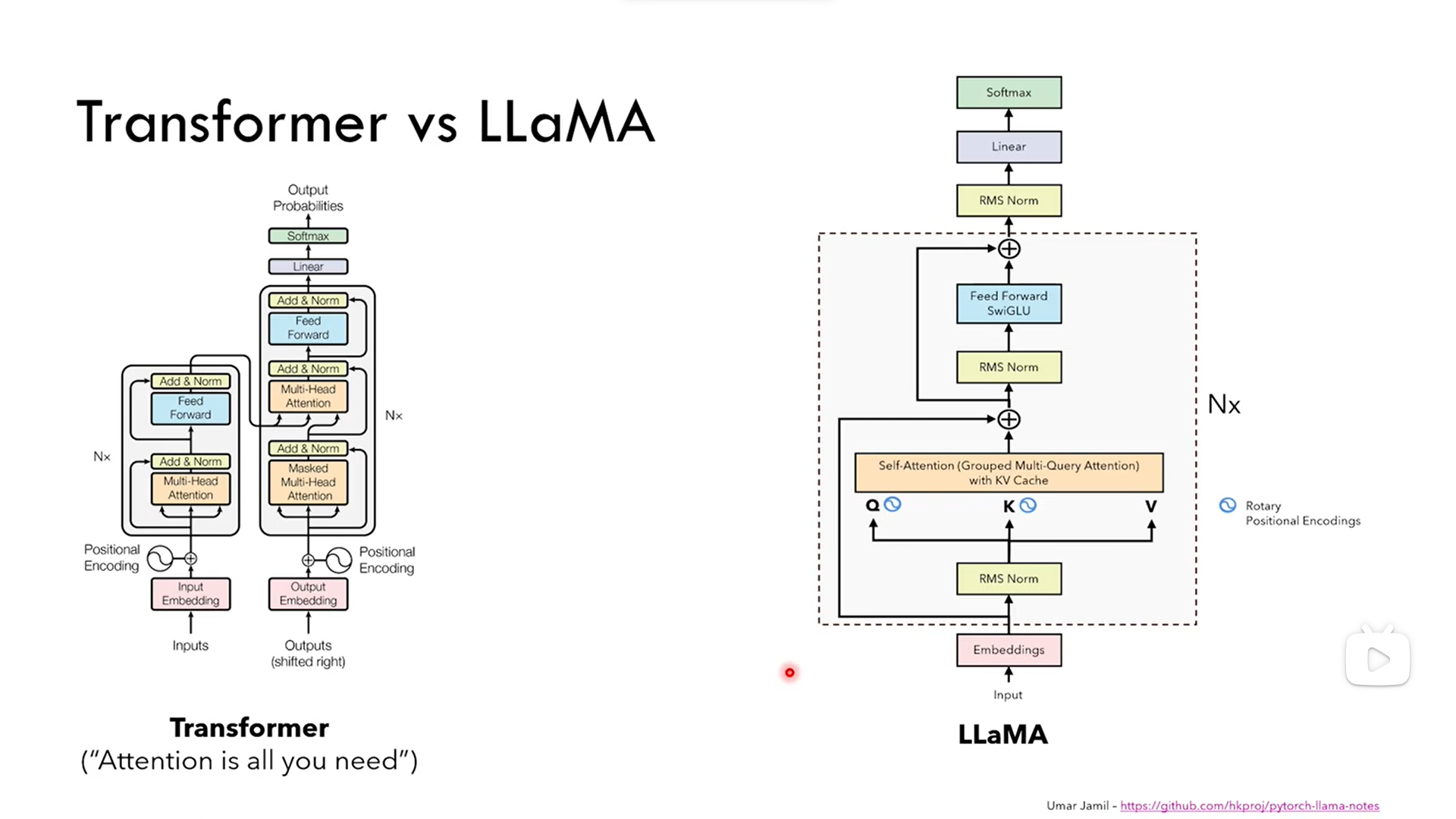

Transformer vs. LLaMA

Normalization choice

Layer normalization (LayerNorm) or root mean square normalization (RMSNorm)? LLaMA uses RMSNorm.

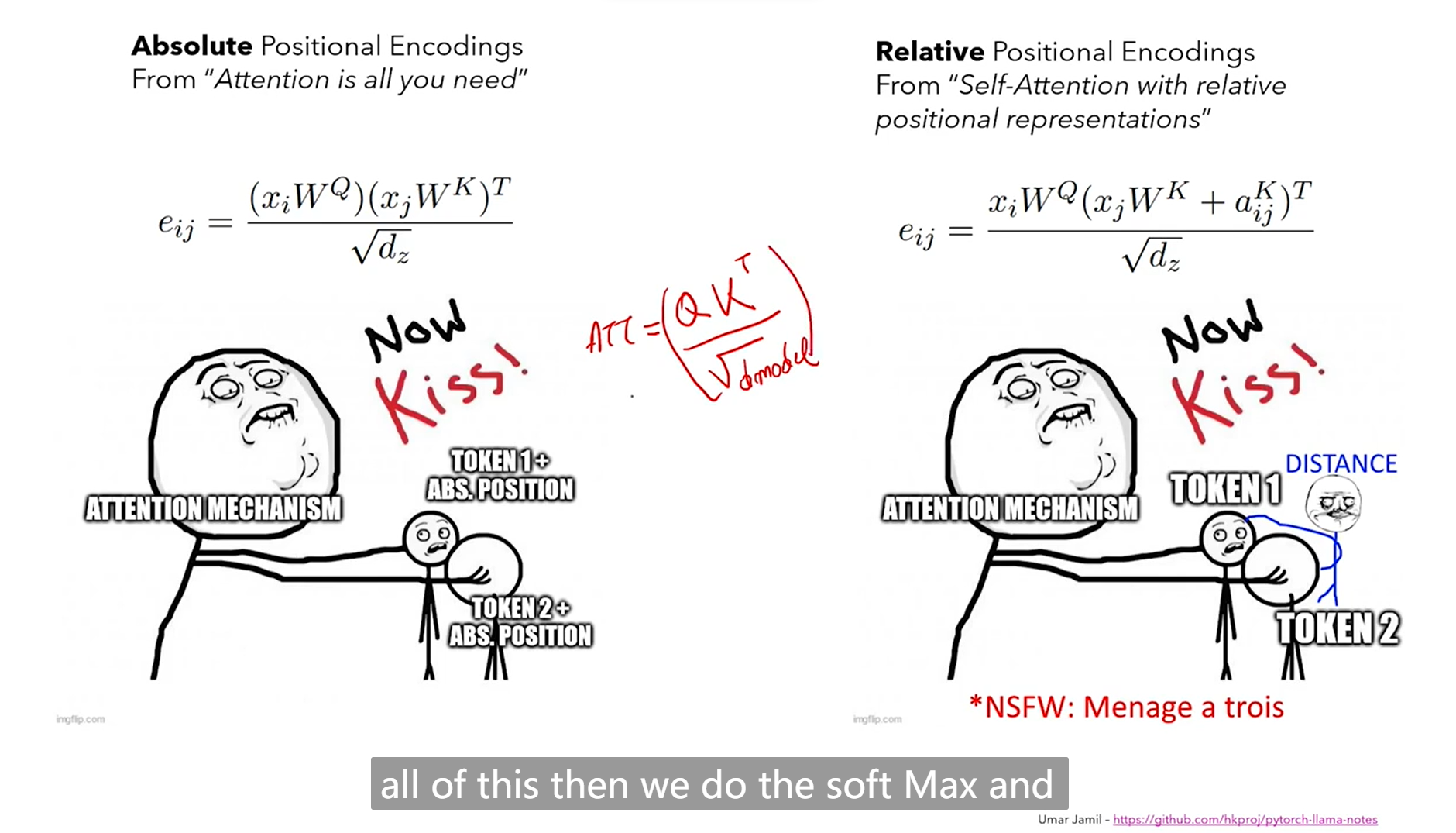

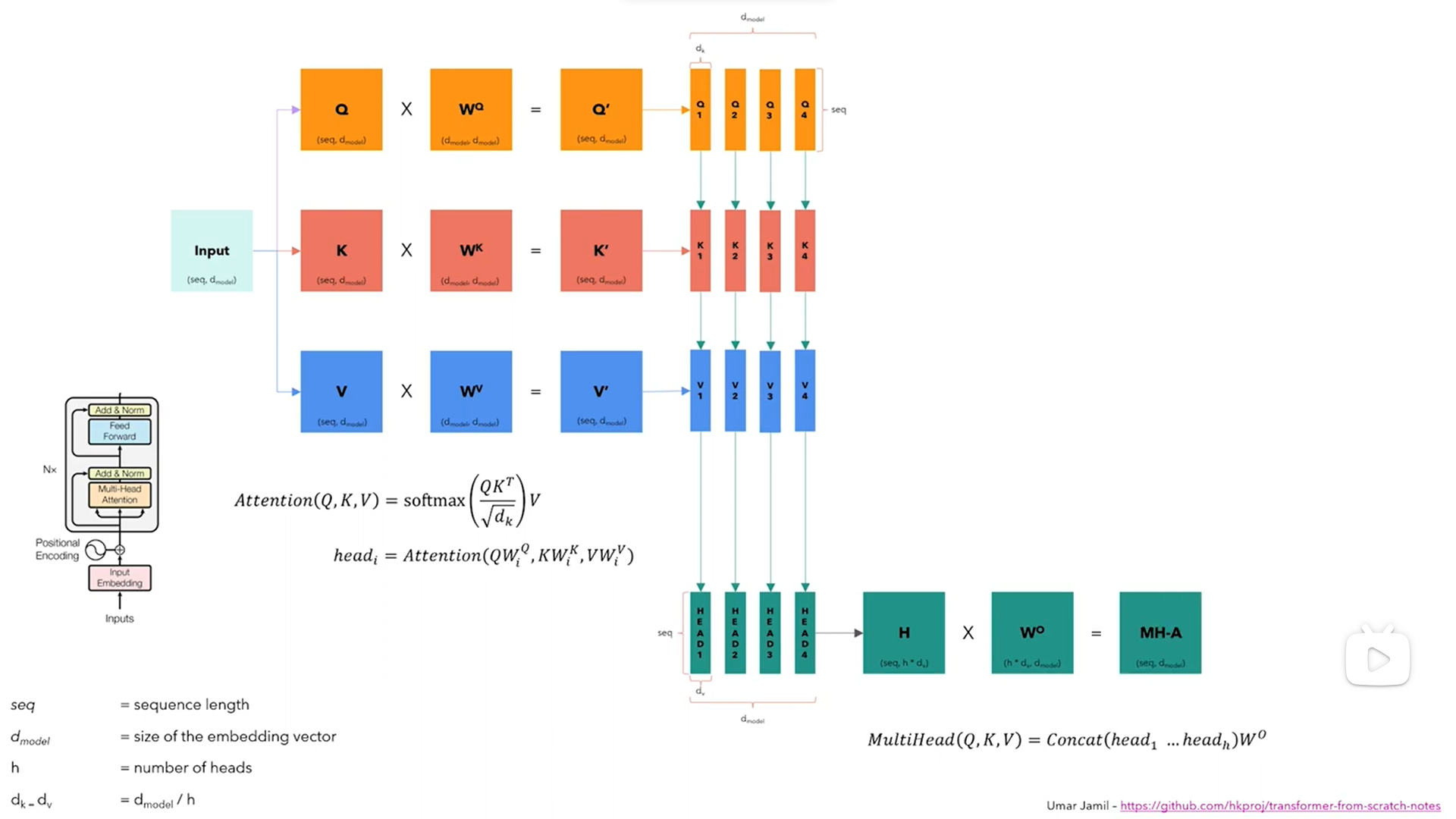

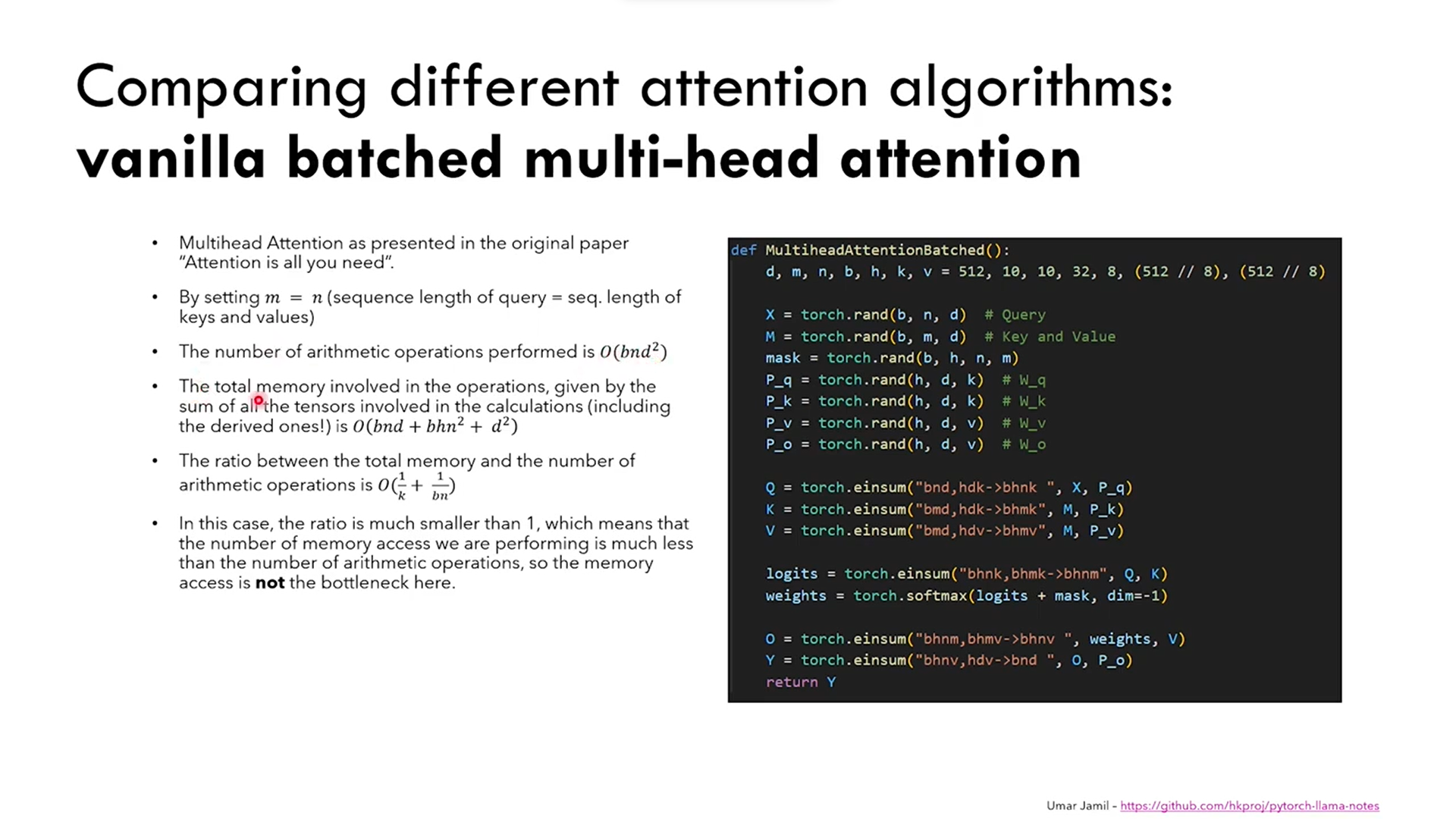
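A minimal NumPy sketch of the difference between the two normalizations. LayerNorm subtracts the mean and divides by the standard deviation. RMSNorm skips the mean subtraction and the bias and only divides by the root mean square. The function names and `eps` values here are illustrative assumptions, not taken from any particular codebase.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    # LayerNorm: re-center (subtract mean) and re-scale (divide by std) over the last axis
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def rms_norm(x, gamma, eps=1e-6):
    # RMSNorm: only re-scale by the root mean square; no mean subtraction, no bias
    rms = np.sqrt(np.mean(x ** 2, axis=-1, keepdims=True) + eps)
    return gamma * x / rms

x = np.array([[1.0, 2.0, 3.0, 4.0]])
gamma = np.ones(4)
beta = np.zeros(4)
ln = layer_norm(x, gamma, beta)  # zero mean, unit variance per row
rn = rms_norm(x, gamma)          # unit root mean square per row, mean not forced to 0
```

For an input that already has zero mean, the two produce (almost) the same result, which is the intuition behind RMSNorm: the re-scaling does most of the work, and dropping the mean computation makes it cheaper.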

Attention mechanism

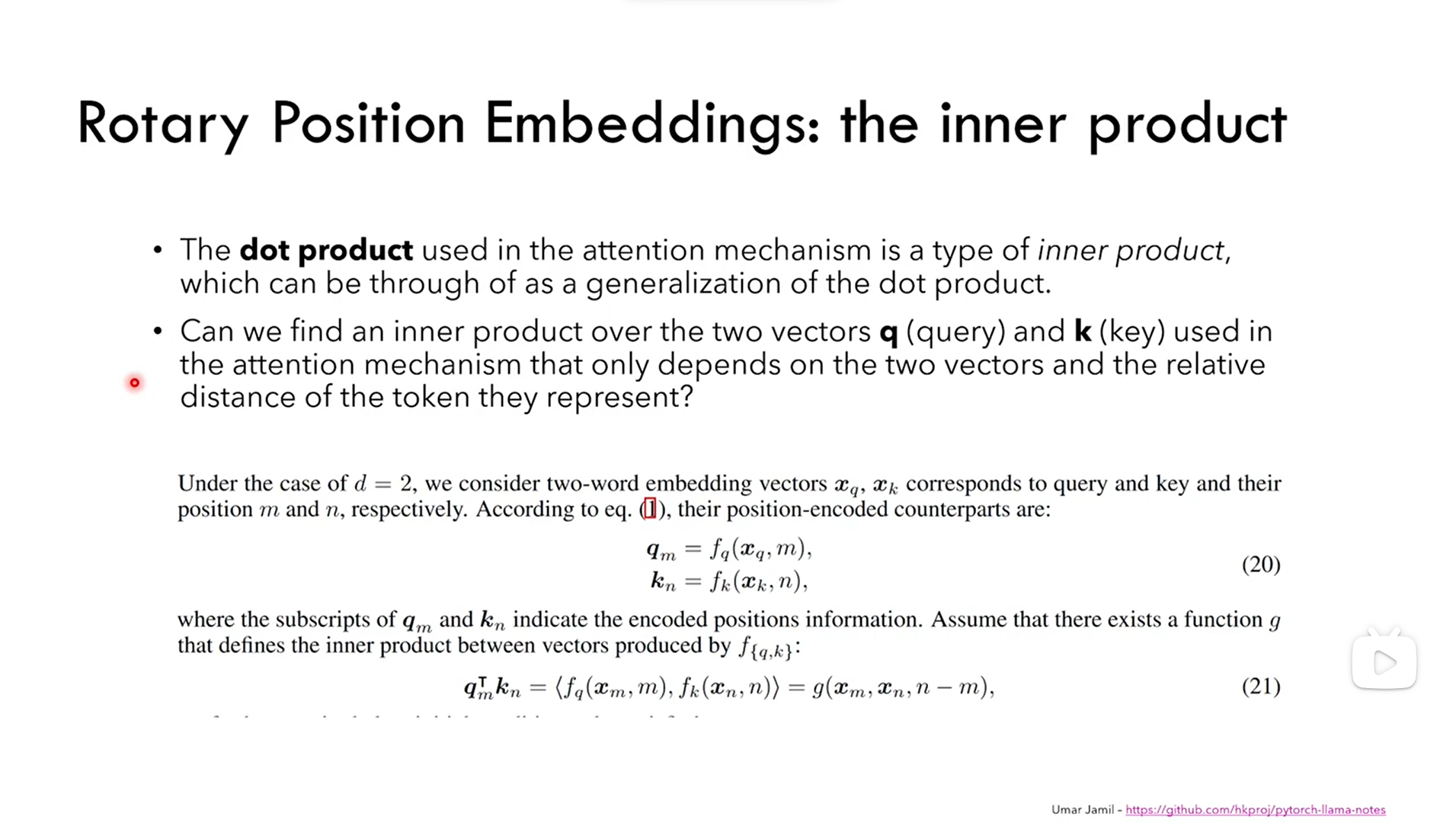

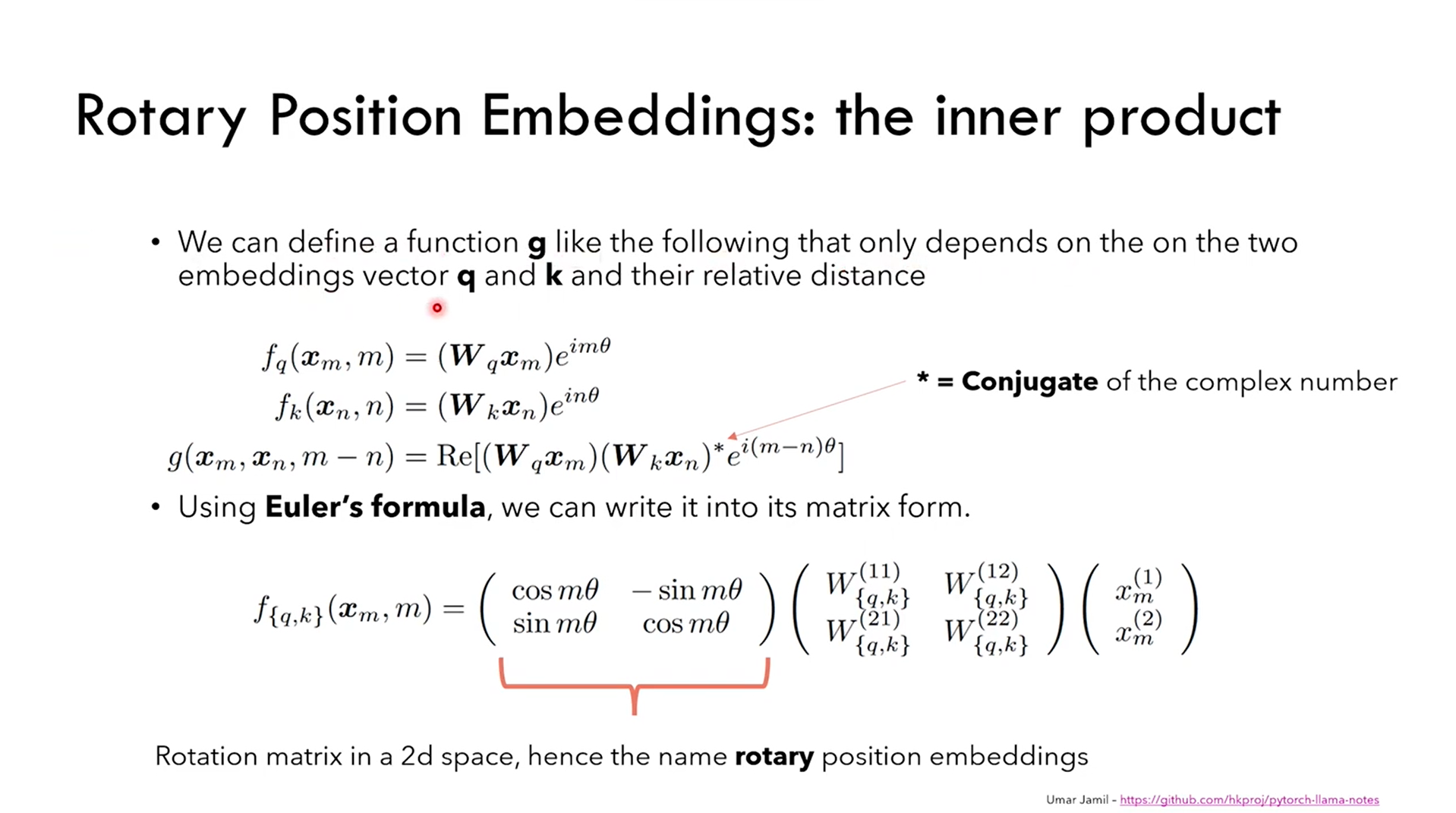

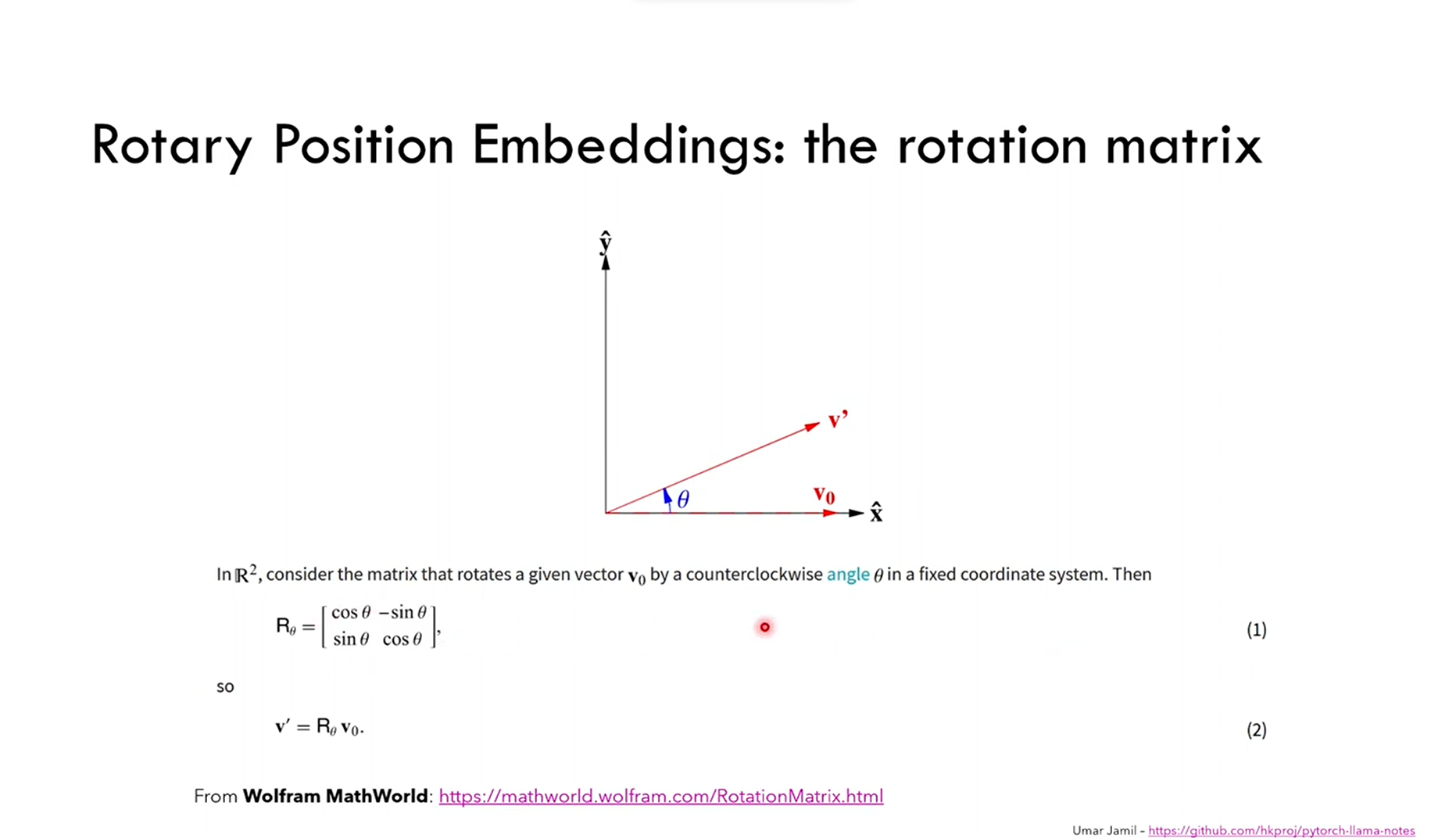

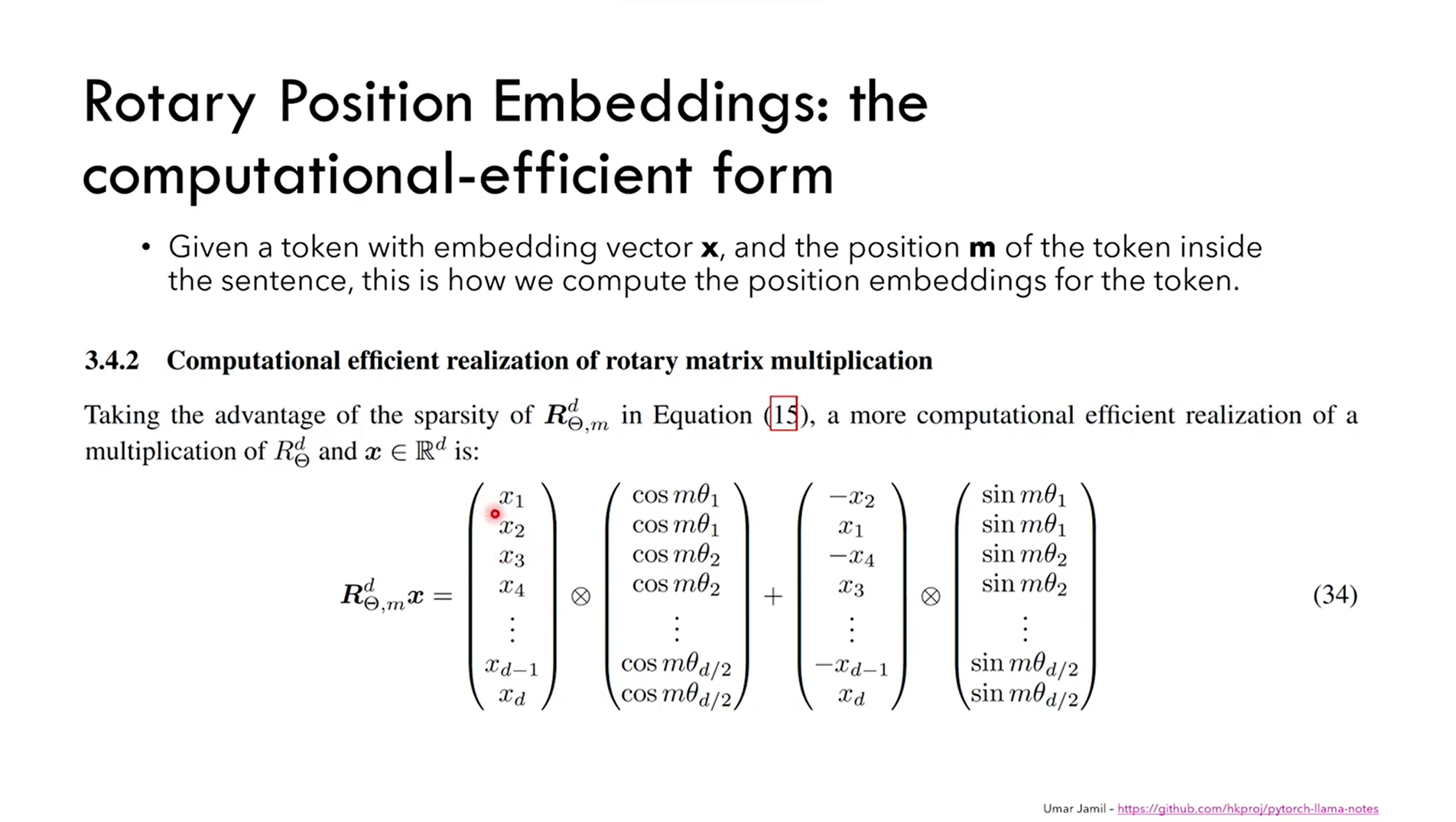
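A minimal NumPy sketch of scaled dot-product attention, softmax(QKᵀ / √d_k)·V, for a single head without batching. The shapes and the boolean `mask` convention (True means "may attend") are assumptions chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v, mask=None):
    # q: (n_q, d_k), k: (n_k, d_k), v: (n_k, d_v)
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)               # (n_q, n_k) similarity scores
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # mask=True means "may attend"
    weights = softmax(scores, axis=-1)            # each row sums to 1
    return weights @ v, weights                   # weighted sum of values

rng = np.random.default_rng(0)
q = rng.standard_normal((3, 8))
k = rng.standard_normal((5, 8))
v = rng.standard_normal((5, 4))
out, w = scaled_dot_product_attention(q, k, v)
```

Dividing by √d_k keeps the dot products from growing with the head dimension, which would otherwise push the softmax into a near one-hot regime.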

Rotary Position Embedding

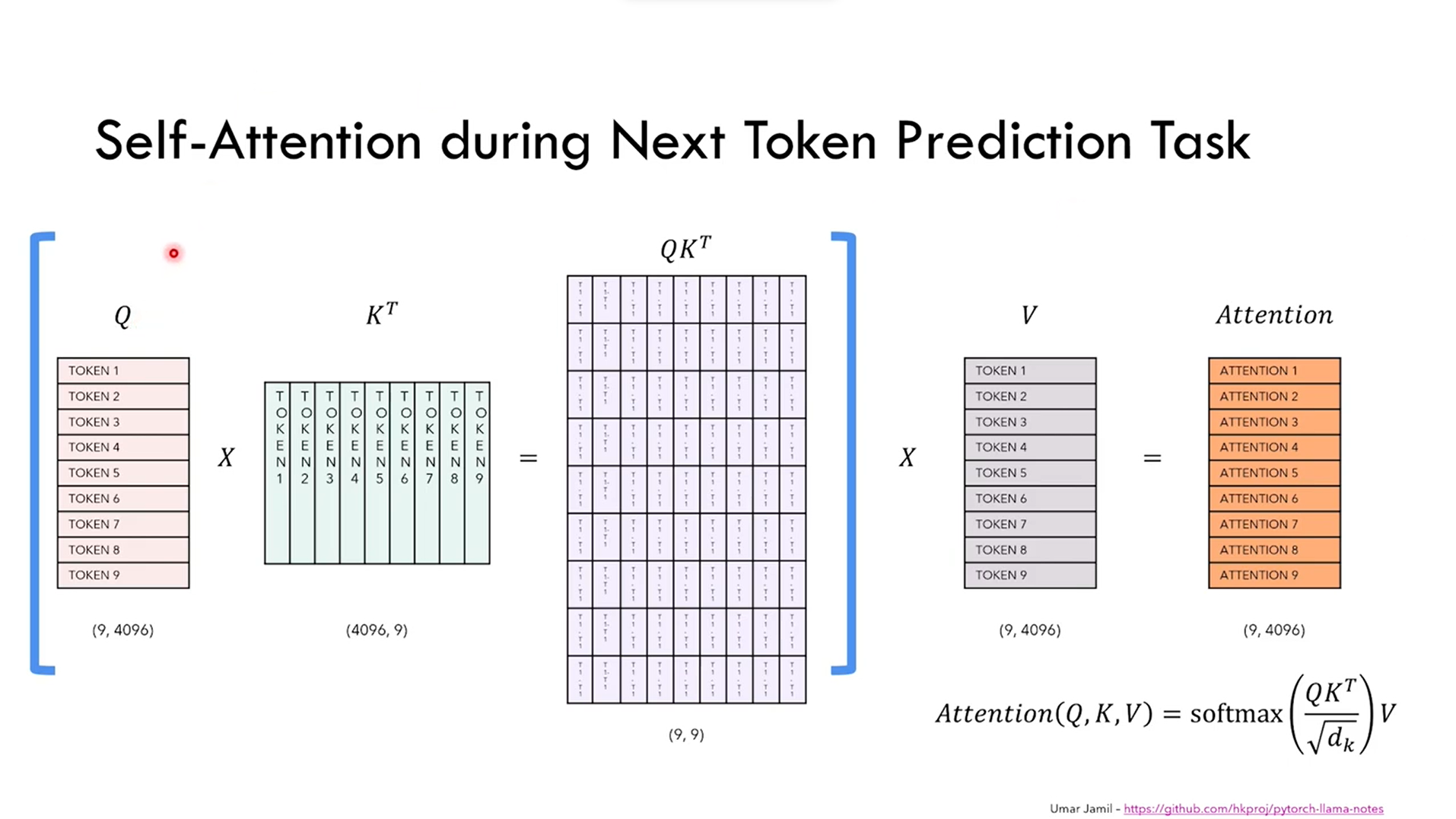

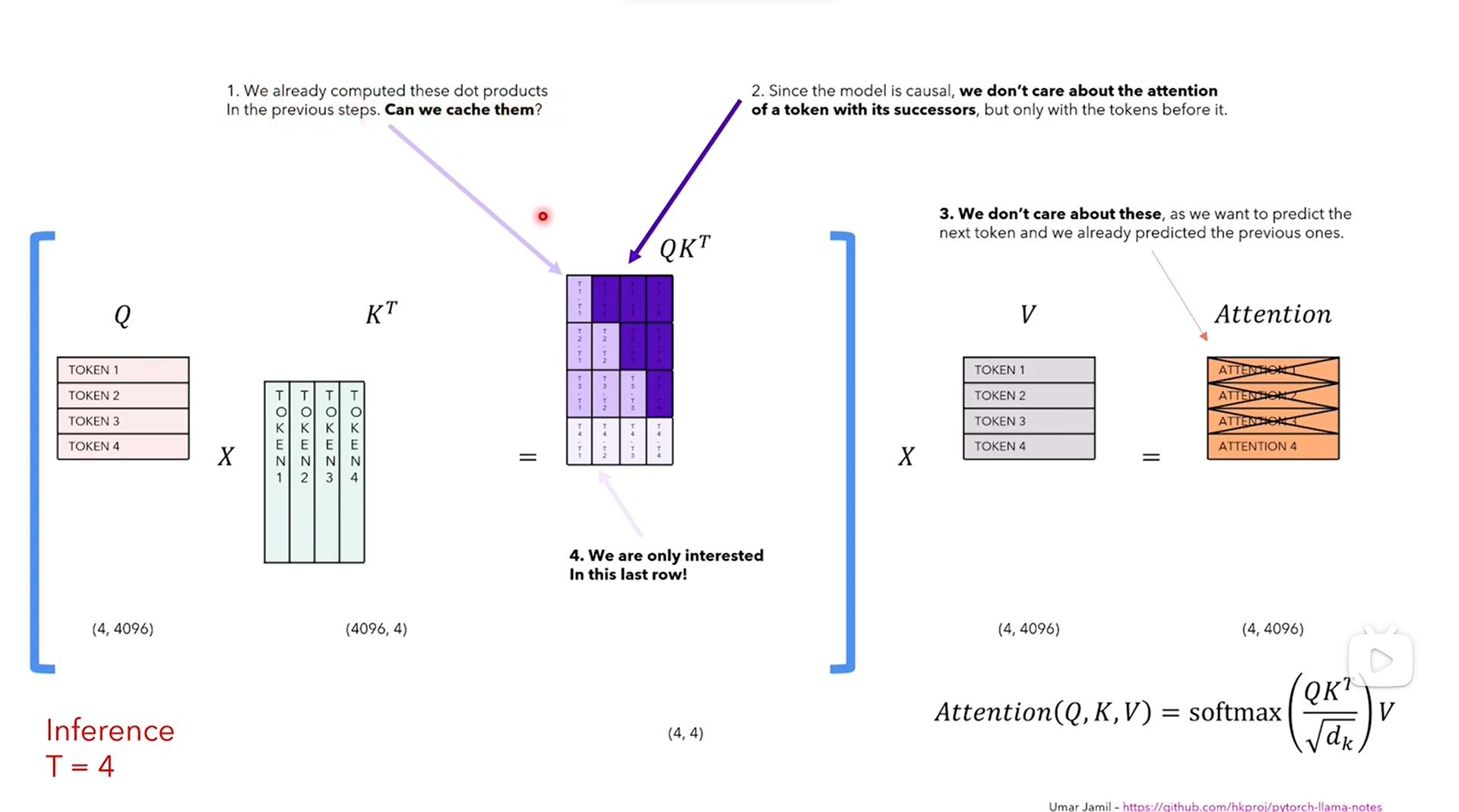
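A minimal NumPy sketch of rotary position embedding, assuming the common formulation in which dimensions are grouped into pairs (x₂ᵢ, x₂ᵢ₊₁) and each pair at position m is rotated by the angle m·θᵢ with θᵢ = 10000^(−2i/d). Implementations differ in which dimensions they pair up; the function name `rope` is hypothetical.

```python
import numpy as np

def rope(x, positions, base=10000.0):
    # x: (seq_len, d) with d even; positions: (seq_len,) integer token positions
    seq_len, d = x.shape
    assert d % 2 == 0, "RoPE rotates pairs of dimensions, so d must be even"
    # One frequency per pair of dimensions: theta_i = base^(-2i/d)
    theta = base ** (-np.arange(0, d, 2) / d)   # (d/2,)
    angles = np.outer(positions, theta)          # (seq_len, d/2), angle m * theta_i
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]       # the pairs (x_{2i}, x_{2i+1})
    out = np.empty_like(x)
    # Apply a 2-D rotation to each pair
    out[:, 0::2] = x_even * cos - x_odd * sin
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out

rng = np.random.default_rng(0)
q = rng.standard_normal((1, 8))
k = rng.standard_normal((1, 8))
# The dot product of rotated q and k depends only on the relative offset m - n
s1 = (rope(q, np.array([5])) @ rope(k, np.array([2])).T).item()
s2 = (rope(q, np.array([13])) @ rope(k, np.array([10])).T).item()
```

Here `s1` and `s2` agree because both pairs of positions are 3 apart. This is the property that lets RoPE inject relative position information directly into the attention scores. Because rotations preserve length, the norm of each vector is also unchanged.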

Self-attention

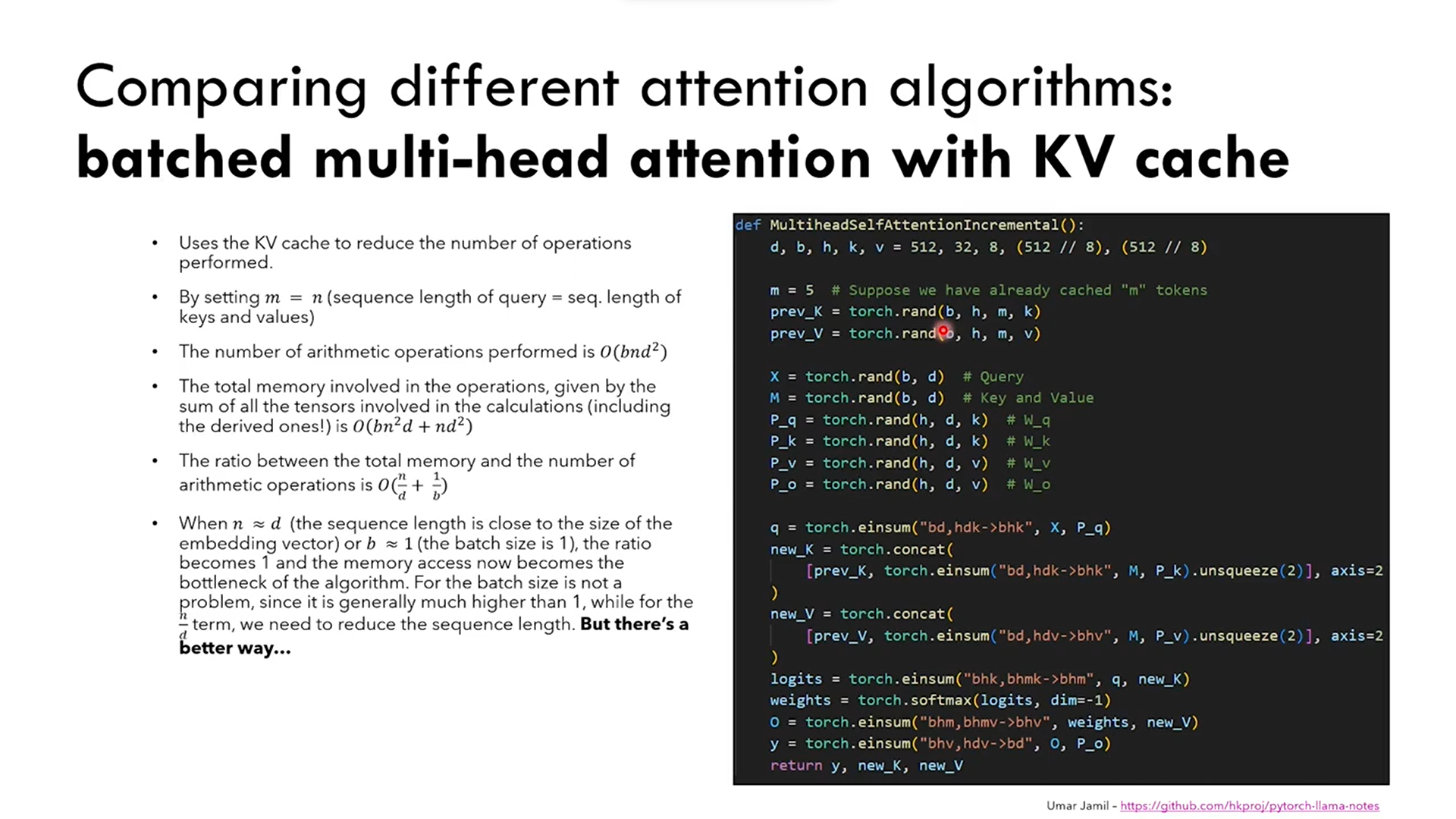

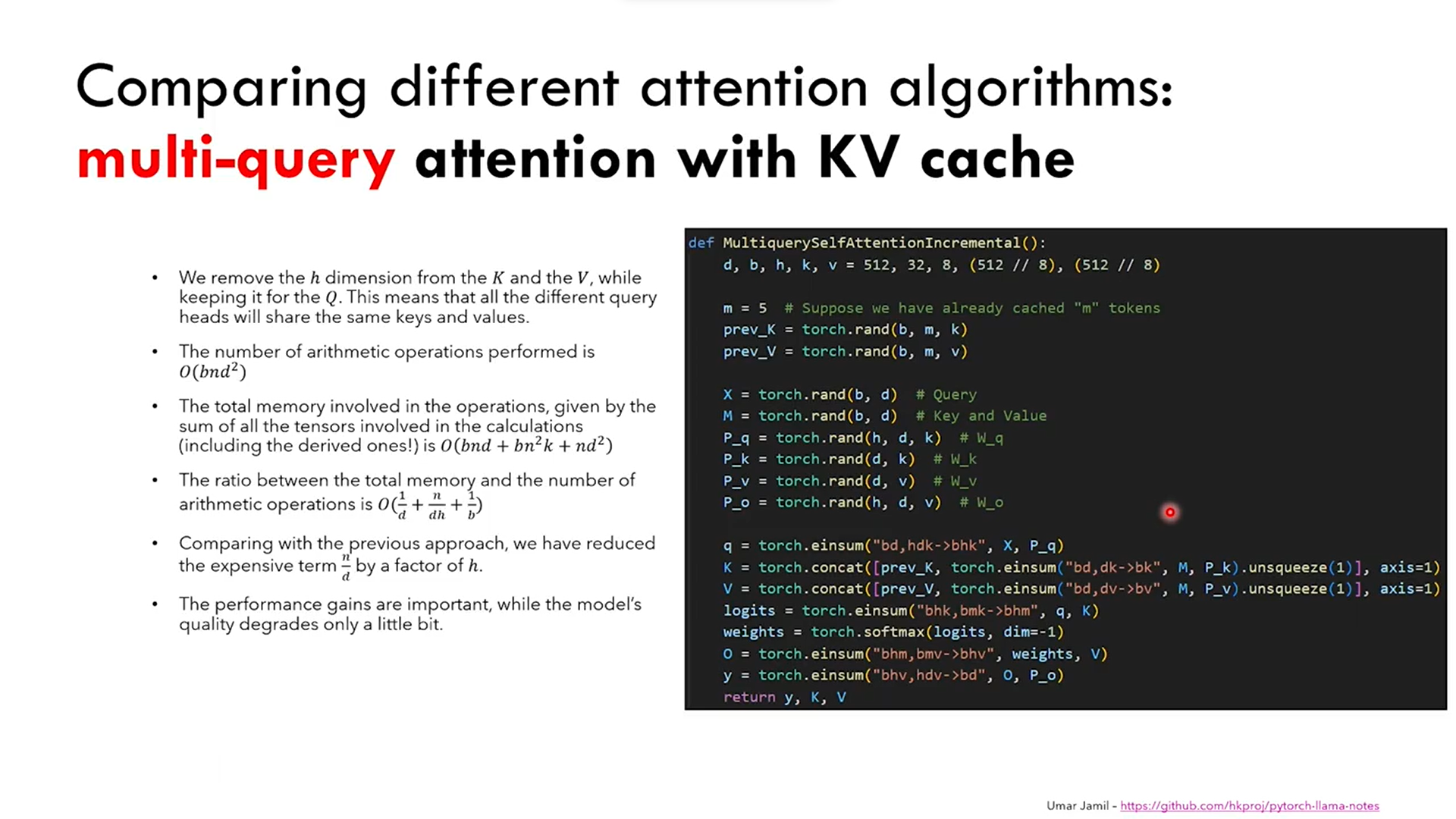
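A minimal single-head NumPy sketch of self-attention as used in a decoder-only model like LLaMA. Queries, keys and values are all projections of the same sequence, and a causal mask stops each token from attending to later tokens. The weight names `w_q`, `w_k`, `w_v` and the sizes are illustrative assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def causal_self_attention(x, w_q, w_k, w_v):
    # In self-attention, queries, keys and values all come from the same sequence x
    q, k, v = x @ w_q, x @ w_k, x @ w_v   # each (seq_len, d_head)
    seq_len, d_head = q.shape
    scores = q @ k.T / np.sqrt(d_head)
    # Causal mask: token i may only attend to tokens 0..i (lower triangle)
    causal = np.tril(np.ones((seq_len, seq_len), dtype=bool))
    scores = np.where(causal, scores, -np.inf)
    return softmax(scores) @ v

rng = np.random.default_rng(0)
d_model, d_head, seq_len = 16, 8, 5
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
x = rng.standard_normal((seq_len, d_model))
y = causal_self_attention(x, w_q, w_k, w_v)
```

Because of the mask, the first output row is simply the first token's value vector, and changing a later token never changes the outputs at earlier positions.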

KV cache

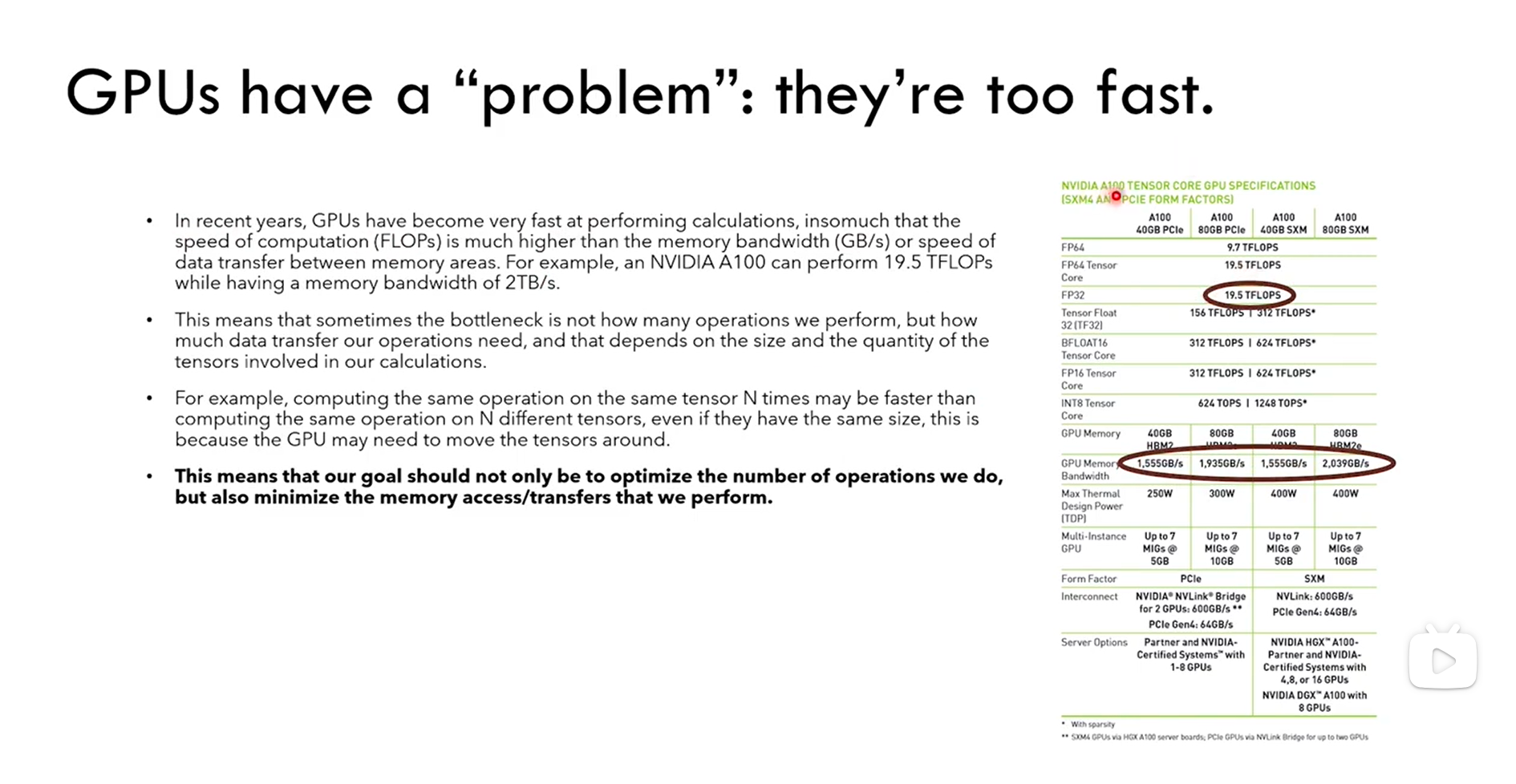
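A minimal single-head NumPy sketch of a KV cache during autoregressive decoding. At each step only the newest token is projected. Its key and value are appended to the cache, and its query attends over all cached keys. The `KVCache` class is hypothetical. The check at the end compares it with recomputing full causal attention over the whole prefix.

```python
import numpy as np

def softmax(x):
    x = x - x.max()
    e = np.exp(x)
    return e / e.sum()

class KVCache:
    """Hypothetical minimal single-head KV cache: stores keys/values of past tokens."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, x_t, w_q, w_k, w_v):
        # Only the newest token is projected; past K/V are reused from the cache
        q = x_t @ w_q
        self.keys.append(x_t @ w_k)
        self.values.append(x_t @ w_v)
        K = np.stack(self.keys)    # (t, d_head)
        V = np.stack(self.values)  # (t, d_head)
        weights = softmax(K @ q / np.sqrt(q.shape[-1]))  # new query vs all cached keys
        return weights @ V

def full_causal_attention(x, w_q, w_k, w_v):
    # Reference without a cache: recompute Q, K, V for the whole prefix
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    n = x.shape[0]
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(np.tril(np.ones((n, n), dtype=bool)), scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    return (w / w.sum(axis=-1, keepdims=True)) @ v

rng = np.random.default_rng(0)
d_model, d_head, n = 16, 8, 6
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
x = rng.standard_normal((n, d_model))

cache = KVCache()
incremental = np.stack([cache.step(x[t], w_q, w_k, w_v) for t in range(n)])
```

The outputs match the full computation. However, each step does only O(t) work for the new token instead of recomputing the keys and values for all previous tokens.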

GPU problem

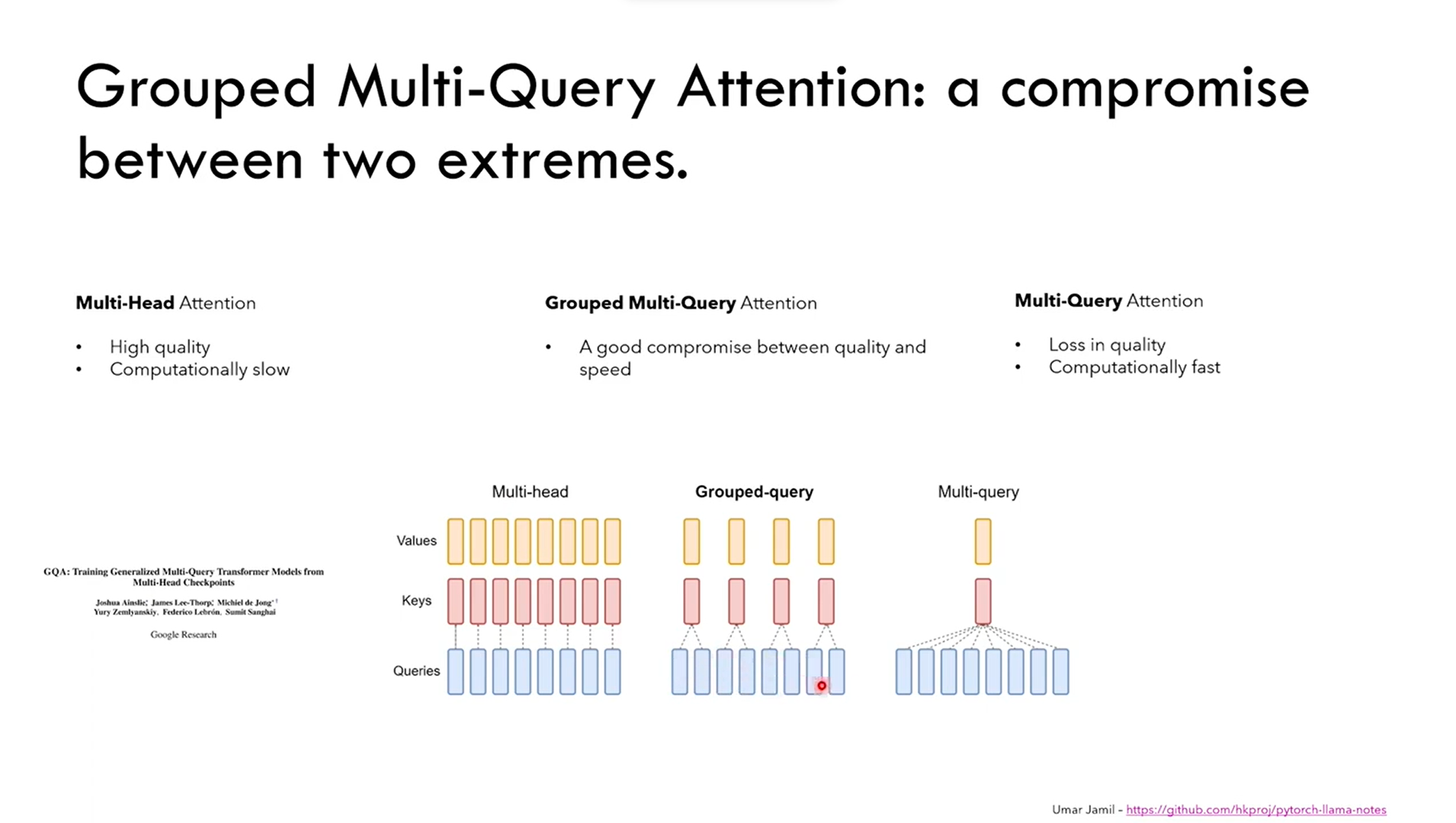
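Assuming the GPU problem here refers to memory traffic rather than compute, a quick back-of-the-envelope calculation shows why the KV cache matters. Every decoding step must read all cached keys and values from GPU memory. The shape below is an assumed LLaMA-7B-like configuration (32 layers, 32 heads, head dimension 128, fp16 storage), and `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_elem=2):
    # Factor 2: one K tensor and one V tensor per layer
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

# Assumed LLaMA-7B-like shape with fp16 (2 bytes per element) and a 2048-token context
mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=2048)
mqa = kv_cache_bytes(n_layers=32, n_kv_heads=1, head_dim=128, seq_len=2048)
print(mha / 2**30, "GiB")  # -> 1.0 GiB with one K/V head per query head
print(mqa / 2**20, "MiB")  # -> 32.0 MiB if all query heads share a single K/V head
```

With one K/V head per query head, that is 512 KiB per token for a single sequence. Sharing K/V across heads shrinks the cache, and the memory read on every step, by a factor equal to the number of heads.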

Multi-query attention
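A minimal NumPy sketch of multi-query attention (MQA). There are several query heads, but a single key head and a single value head are shared by all of them, so only one K/V pair per token needs to be cached. The function name, weight shapes and sizes are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_query_attention(x, w_q, w_k, w_v, n_heads):
    # w_q: (d_model, n_heads * d_head) -> one query projection per head
    # w_k, w_v: (d_model, d_head)      -> a single key/value head shared by all query heads
    seq_len = x.shape[0]
    d_head = w_k.shape[1]
    q = (x @ w_q).reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)  # (h, n, d)
    k = x @ w_k                                                        # (n, d), shared
    v = x @ w_v                                                        # (n, d), shared
    scores = q @ k.T / np.sqrt(d_head)  # (h, n, n): the shared K broadcasts over heads
    causal = np.tril(np.ones((seq_len, seq_len), dtype=bool))
    scores = np.where(causal, scores, -np.inf)
    out = softmax(scores) @ v           # (h, n, d)
    return out.transpose(1, 0, 2).reshape(seq_len, n_heads * d_head)  # concatenate heads

rng = np.random.default_rng(0)
d_model, n_heads, d_head, seq_len = 32, 4, 8, 5
w_q = rng.standard_normal((d_model, n_heads * d_head))
w_k = rng.standard_normal((d_model, d_head))
w_v = rng.standard_normal((d_model, d_head))
x = rng.standard_normal((seq_len, d_model))
y = multi_query_attention(x, w_q, w_k, w_v, n_heads)
```

Each head still computes its own attention pattern from its own queries. Only the keys and values are shared, which is what reduces memory traffic during decoding.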

SwiGLU activation

Reference: "GLU Variants Improve Transformer" (Shazeer, 2020)
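A minimal NumPy sketch of a SwiGLU feed-forward block: (Swish(xW₁) ⊙ xW₃)W₂, where Swish with β = 1 is x·sigmoid(x), also called SiLU. The weight names `w1`, `w3`, `w2` are illustrative. The hidden size follows the LLaMA paper's choice of roughly 2/3·4d instead of 4d, which keeps the parameter count comparable despite the extra weight matrix.

```python
import numpy as np

def silu(x):
    # Swish with beta = 1 (also called SiLU): x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def swiglu_ffn(x, w1, w3, w2):
    # Gate branch (Swish) multiplied element-wise with a linear branch, then projected back
    return (silu(x @ w1) * (x @ w3)) @ w2

rng = np.random.default_rng(0)
d_model = 8
d_hidden = int(2 * 4 * d_model / 3)  # roughly 2/3 of the usual 4 * d_model
w1 = rng.standard_normal((d_model, d_hidden))
w3 = rng.standard_normal((d_model, d_hidden))
w2 = rng.standard_normal((d_hidden, d_model))
x = rng.standard_normal((3, d_model))
y = swiglu_ffn(x, w1, w3, w2)  # same shape as x: (3, d_model)
```

The linear branch acts as a learned gate. If it outputs zero, the whole block outputs zero no matter what the Swish branch computes.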